In recent posts we focused on enhancing your organisation's cybersecurity posture, starting with establishing cloud security visibility and followed by a practical plan to secure the cloud network perimeter with AWS Web Application Firewall (AWS WAF).

In this post, we focus on establishing perimeter security for workloads that are “protected” behind an Amazon CloudFront distribution. We deliberately placed the word protected in inverted commas to indicate that such workloads are not always as protected as one would expect, unless a few very simple but sometimes forgotten perimeter security practices have been followed. We will explore a common perimeter network misconception that results in a well-protected edge but leaves a gaping hole in the perimeter. That hole may affect workload stability, performance, and operating costs, and may ultimately lead to a workload exploit.

To briefly recap, the effects of a cybersecurity breach are far-reaching and impact the organisation, its customers, and even the wider community, depending on the nature of the business and the scope of the breach itself. According to recent studies, the average cost of a data breach to a small Australian business is a staggering AU$200,000, with lost productivity ranging between 23 and 51 days. In response to global cybersecurity threats, the Australian Cyber Security Centre (ACSC) issued a notice in February 2020 urging Australian organisations to adopt an enhanced cyber security posture.

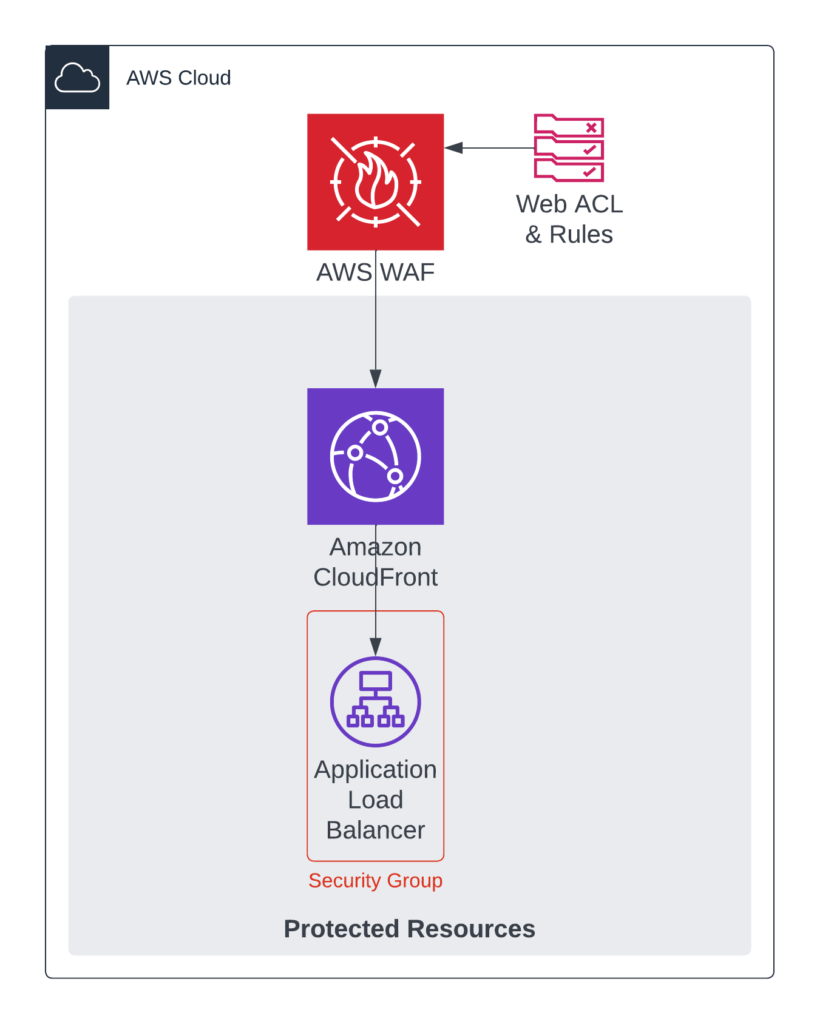

In the previous post we investigated perimeter security enhancement using AWS Web Application Firewall. A common pattern to improve the performance of a website or API and to guard against Denial-of-Service attacks is to deploy Amazon CloudFront, configure the necessary cache settings and, in our scenario, point the CloudFront origin to our Application Load Balancer. Such a common deployment pattern is depicted in the diagram below.

A commonly overlooked perimeter component in such a deployment is the Application Load Balancer (ALB). In this architecture, the AWS Web Application Firewall inspects traffic and blocks malicious requests from the public Internet; however, only traffic destined for Amazon CloudFront is protected.

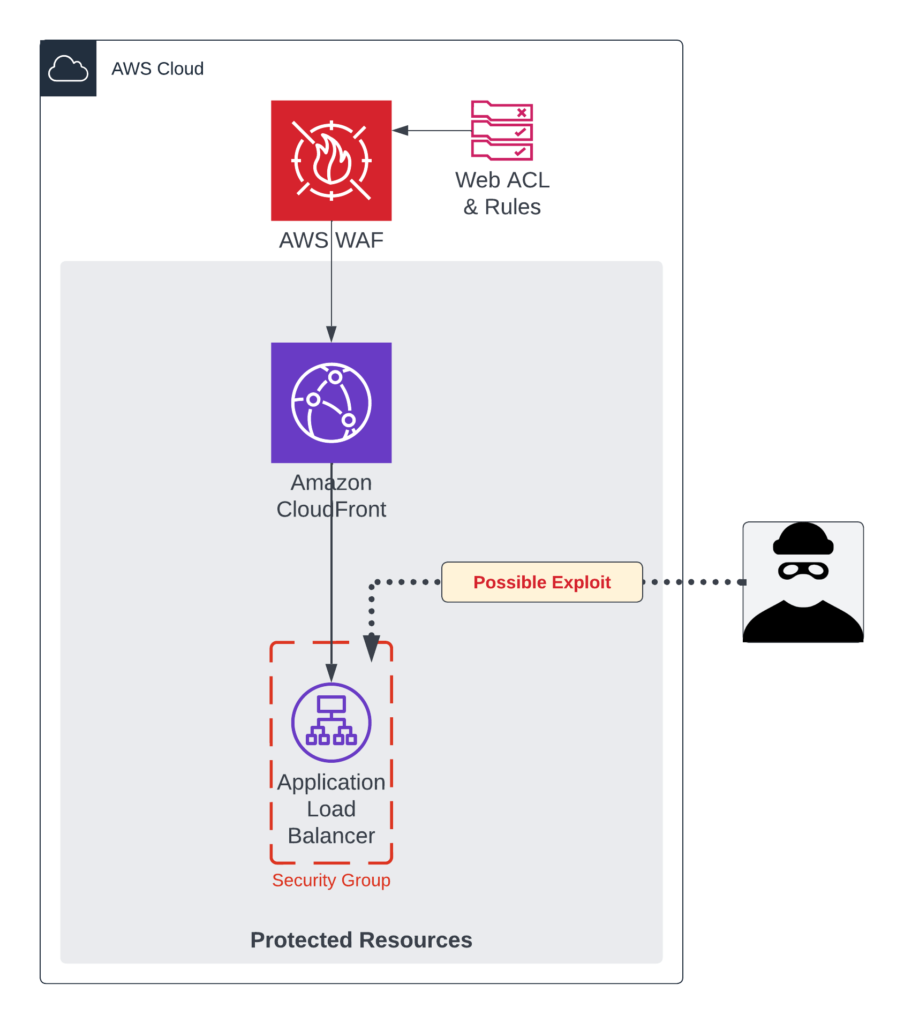

As highlighted in the diagram below, traffic that reaches the Application Load Balancer directly is not protected by the Web Application Firewall. We established in the previous post that even an unpublished and totally unknown webserver and IP address can be identified and assessed for exploits within minutes of being deployed. An Application Load Balancer (ALB) is used in this example; however, the same security oversight is common for other CloudFront origins such as Classic Load Balancers, Network Load Balancers, and EC2 nodes.

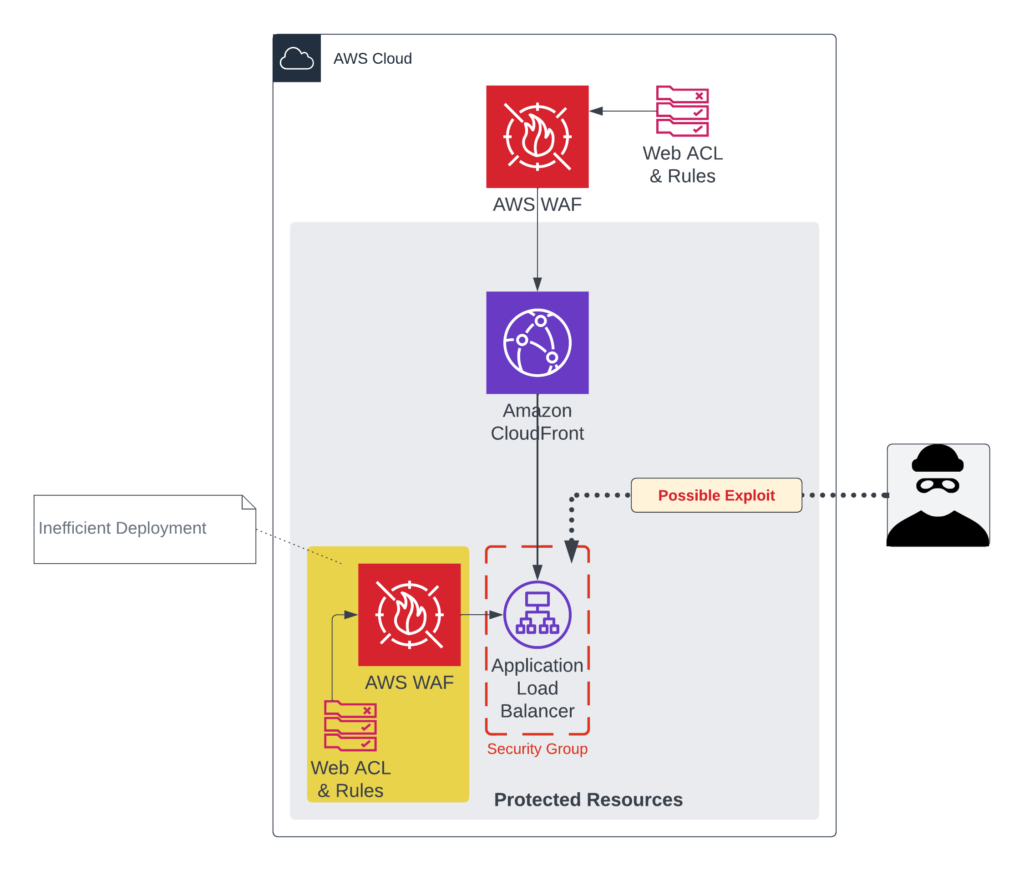

A possible solution to our exploit problem could be to attach an AWS WAF Web ACL to our Application Load Balancer as well. However, this would be considered inefficient, as traffic arriving via CloudFront would be inspected twice, which could increase operating costs.

The security challenge to remediate in this architecture is to allow only traffic that originates from Amazon CloudFront. Several options exist to implement this security control; however, an easy and practical way to improve our cybersecurity posture is to make use of AWS-managed prefix lists. AWS-managed prefix lists can be used within AWS Security Group rules to allow requests destined for, or originating from, Amazon Web Services IP ranges for the following services:

- Amazon S3

- DynamoDB

- Amazon CloudFront

The diagram below highlights the most commonly implemented solutions for protecting CloudFront origins (with a focus on AWS ALBs) so that they only allow traffic originating from Amazon CloudFront.

Option 1 sets a “secret header” (e.g., “x-origin-token”) with a large random string value as a custom header within the CloudFront origin configuration, which is then validated within the Application Load Balancer listener rules. Option 1 delivers a good deal of security by obscurity and is probably a good start in our practical cybersecurity enhancement journey. The challenge here is that traffic still makes its way to the load balancer, and a high request volume of DoS or other malicious traffic may start to affect our load balancer operating costs. Refer to the official AWS documentation for step-by-step instructions on how to configure CloudFront origin custom headers and the Application Load Balancer listener rules. This solution is only applicable when the CloudFront origin is an Application Load Balancer, or when the header can be securely and safely validated without compromising the workload itself. There is no benefit in validating this header in the Java or PHP application you are trying to protect in the first place.

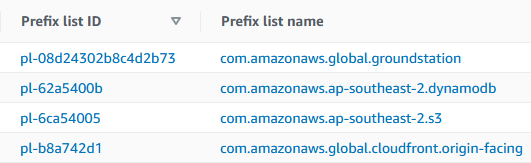

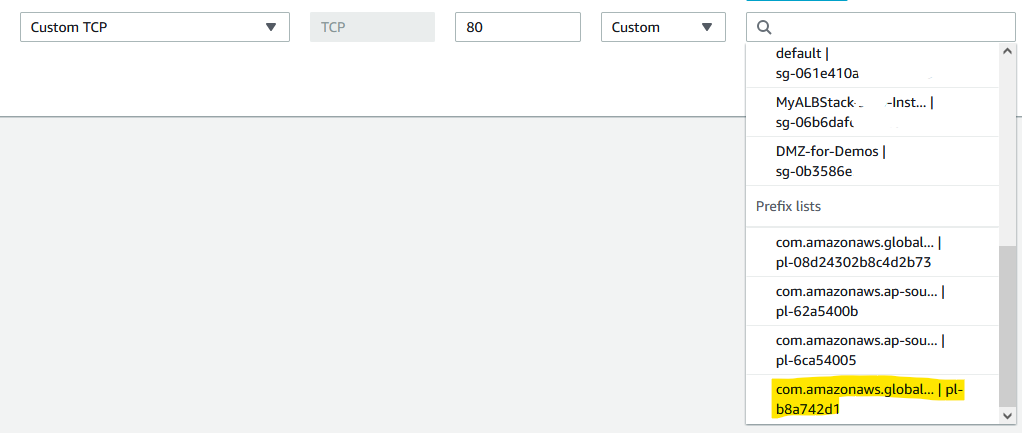
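As a rough illustration of Option 1, the sketch below generates a random token and builds the request parameters for an ALB listener rule that forwards only when the header matches, plus a default action that rejects everything else. The function names, the 403 response body, and the priority value are assumptions made for this example; the dictionaries follow the shape expected by the boto3 `elbv2` `create_rule` and `modify_listener` calls, but treat this as a starting point rather than a definitive implementation.

```python
import secrets


def make_origin_token(num_bytes: int = 32) -> str:
    """Return a URL-safe random string to use as the secret header value."""
    return secrets.token_urlsafe(num_bytes)


def build_header_rule(listener_arn: str, target_group_arn: str, token: str,
                      header_name: str = "x-origin-token",
                      priority: int = 1) -> dict:
    """Build keyword arguments for elbv2.create_rule.

    The rule forwards a request to the target group only when the
    (hypothetical) secret header carries the expected token.
    """
    return {
        "ListenerArn": listener_arn,
        "Priority": priority,
        "Conditions": [{
            "Field": "http-header",
            "HttpHeaderConfig": {
                "HttpHeaderName": header_name,
                "Values": [token],
            },
        }],
        "Actions": [{"Type": "forward", "TargetGroupArn": target_group_arn}],
    }


def build_default_deny() -> list:
    """Default listener action: reject requests that did not match the rule."""
    return [{
        "Type": "fixed-response",
        "FixedResponseConfig": {
            "StatusCode": "403",
            "ContentType": "text/plain",
            "MessageBody": "Access denied",
        },
    }]
```

With boto3 installed, the result could be applied with something like `boto3.client("elbv2").create_rule(**build_header_rule(...))`, followed by `modify_listener(ListenerArn=..., DefaultActions=build_default_deny())`. The same token must also be set as the custom header value on the CloudFront origin.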

Option 2 is the most effective and modern approach to ensure that only traffic that originates from Amazon CloudFront is processed by the Application Load Balancer (or EC2 instance or Network Load Balancer). This option makes use of the previously introduced AWS-managed prefix lists. A quick look at the prefix lists in the AWS Console (under VPC services) shows the various lists, which include Amazon CloudFront.

Make a note of the prefix list ID, as the Security Group interface renders prefix lists rather poorly. The com.amazonaws. namespace takes up all the display real estate and makes it impossible to see what you are selecting (as of 09/2022).
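If the console makes the ID hard to read, it can also be looked up programmatically. The sketch below assumes a boto3 EC2 client is passed in and that the CloudFront list is published under the name `com.amazonaws.global.cloudfront.origin-facing`; verify that name against the prefix lists shown in your own account and region. The function name is hypothetical.

```python
# Assumed name of the AWS-managed CloudFront prefix list; confirm in your account.
CLOUDFRONT_PREFIX_LIST_NAME = "com.amazonaws.global.cloudfront.origin-facing"


def find_cloudfront_prefix_list_id(ec2_client) -> str:
    """Return the prefix list ID (pl-...) of the CloudFront managed prefix list.

    `ec2_client` is expected to behave like boto3.client("ec2").
    """
    response = ec2_client.describe_managed_prefix_lists(
        Filters=[{
            "Name": "prefix-list-name",
            "Values": [CLOUDFRONT_PREFIX_LIST_NAME],
        }]
    )
    prefix_lists = response.get("PrefixLists", [])
    if not prefix_lists:
        raise LookupError(
            f"No managed prefix list named {CLOUDFRONT_PREFIX_LIST_NAME} found"
        )
    return prefix_lists[0]["PrefixListId"]
```

A typical call would be `find_cloudfront_prefix_list_id(boto3.client("ec2"))`. Prefix list IDs differ per region, so the lookup should run against the region that hosts the load balancer.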

One last step to enhance our cybersecurity posture remains.

Configure the Security Group that protects the workload ingress point. In our example, the CloudFront origin is an Application Load Balancer. Add the CloudFront prefix list to the inbound rules of the security group, remove the 0.0.0.0/0 rule, and you are all set.
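The security group change described above could be scripted along these lines. This is a minimal sketch that assumes HTTPS on port 443, a boto3-style EC2 client, and an existing open rule that matches the one being revoked exactly; the function names and rule description are made up for the example.

```python
def build_cloudfront_ingress(prefix_list_id: str, port: int = 443) -> list:
    """Inbound permission that allows only the CloudFront prefix list."""
    return [{
        "IpProtocol": "tcp",
        "FromPort": port,
        "ToPort": port,
        "PrefixListIds": [{
            "PrefixListId": prefix_list_id,
            "Description": "Allow CloudFront origin-facing only",
        }],
    }]


def build_open_ingress(port: int = 443) -> list:
    """The 0.0.0.0/0 inbound permission that should be removed."""
    return [{
        "IpProtocol": "tcp",
        "FromPort": port,
        "ToPort": port,
        "IpRanges": [{"CidrIp": "0.0.0.0/0"}],
    }]


def restrict_to_cloudfront(ec2_client, group_id: str,
                           prefix_list_id: str, port: int = 443) -> None:
    """Add the prefix-list rule first, then revoke the open rule.

    Adding before revoking avoids a window in which CloudFront itself
    is locked out of the origin.
    """
    ec2_client.authorize_security_group_ingress(
        GroupId=group_id,
        IpPermissions=build_cloudfront_ingress(prefix_list_id, port),
    )
    ec2_client.revoke_security_group_ingress(
        GroupId=group_id,
        IpPermissions=build_open_ingress(port),
    )
```

Called as `restrict_to_cloudfront(boto3.client("ec2"), "sg-...", "pl-...")`, this leaves the prefix-list rule as the only inbound rule on that port, provided no other inbound rules exist.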

All these configurations can also be done in AWS CloudFormation, CDK, or your Infrastructure-as-Code framework of choice.

As indicated in the diagram, Option 3 is considered legacy. However, the concept of updating firewall rules within an on-premises environment may still be very relevant for some use cases.
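The text does not spell out how Option 3 works, so the sketch below rests on an assumption: that firewall rules are kept up to date from the IP ranges AWS publishes at https://ip-ranges.amazonaws.com/ip-ranges.json. Under that assumption, the CloudFront CIDR blocks can be extracted from the parsed JSON document as follows. The function name is hypothetical, and the download and firewall update steps are deliberately left out.

```python
def cloudfront_cidrs(ip_ranges: dict, service: str = "CLOUDFRONT") -> list:
    """Return the sorted, de-duplicated IPv4 CIDR blocks for one AWS service.

    `ip_ranges` is the parsed content of the published ip-ranges.json file,
    whose "prefixes" entries carry "ip_prefix" and "service" keys.
    """
    return sorted({
        entry["ip_prefix"]
        for entry in ip_ranges.get("prefixes", [])
        if entry.get("service") == service
    })
```

The parsed document could be obtained with `json.load(urllib.request.urlopen(...))`, and the resulting list fed into whatever rule format the on-premises firewall expects. Because the published ranges change over time, such a process needs to run on a schedule, which is a large part of why the managed prefix list in Option 2 is simpler.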

Option 4 should be considered when a security, compliance, or sensitive workload requirement demands that the origin only accepts traffic from Amazon CloudFront IP addresses, and only from the CloudFront distributions configured by your organisation. Scenarios that help justify the implementation of Option 4 include, but are not limited to:

- Authentication or Authorization behaviours managed within Lambda@Edge functions.

- Content adjustments managed within Lambda@Edge functions.

- Where Field-Level-Encryption is implemented for sensitive workloads.

In this post we looked at enhancing an organisation's cybersecurity posture by correcting common perimeter security misconfigurations. However, it is important to note that the biggest exposure to malware attacks remains internal staff with elevated network or system access. Issuing users with a secondary system identity or account for administrative purposes offers extra protection on the internal network. In addition, establishing a continuous security awareness program for all staff offers the best protection against malware attacks. Prevention is better than paying an expensive ransom and suffering reputational damage.

Kick-start your business’ cybersecurity posture enhancement journey by visiting the ACSC’s Small Business Cyber Security Guide and the ACSC’s Strategies to Mitigate Cyber Security Incidents.

For reference, additional statistics on cybercrime can be explored here. A bit of trivia and latest news on global and local cybersecurity attacks can be found on the BBC website and here.